Tesla CEO Elon Musk fought court cases on opposite coasts on Monday, raising a key question about the entrepreneur that could either accelerate his plan to put self-driving Teslas on streets or throw up a major roadblock: Can this billionaire, who has a tendency to exaggerate, really be trusted?

In Miami, Florida, a Tesla driver who slammed into a woman out stargazing said he put too much faith in his car’s Autopilot program before the deadly crash.

“I trusted the technology too much,” said George McGee.

“I believed that if the car saw something in front of it, it would provide a warning and apply the brakes.”

Meanwhile, regulators arguing a case in Oakland, California, tried to place exaggerated claims about the Autopilot technology at the center of a request to suspend Tesla’s ability to sell cars in the state.

Musk’s tendency to talk big, whether about his electric cars, his rockets or his government cost-cutting efforts, has landed him in trouble with investors, regulators and courts before, but rarely at such a delicate moment.

After his social media spat with President Donald Trump, Musk can no longer count on a light regulatory touch from Washington.

Meanwhile, Tesla sales have plunged and a hit to his safety reputation could threaten his next big project: rolling out driverless robotaxis — hundreds of thousands of them — in several U.S. cities by the end of 2026.

The Miami case holds other dangers, too. Lawyers for the family of the dead woman, Naibel Benavides Leon, recently convinced the judge overseeing the jury trial to allow them to argue for punitive damages. A car crash lawyer not involved in the case, but closely following it, said that could cost Tesla tens of millions of dollars, or possibly more.

“I’ve seen punitive damages go to the hundreds of millions, so that is the floor,” said Miguel Custodio of Los Angeles-based Custodio & Dubey.

“It is also a signal to other plaintiffs that they can also ask for punitive damages, and then the payments could start compounding.”

Tesla did not reply to a request for comment.

That Tesla has allowed the Miami case to proceed to trial is surprising. It has settled at least four cases over deadly accidents involving Autopilot, including payments just last week to a Florida family of a Tesla driver.

That said, Tesla was victorious in two other jury cases, both in California, that also sought to lay blame on its technology for crashes.

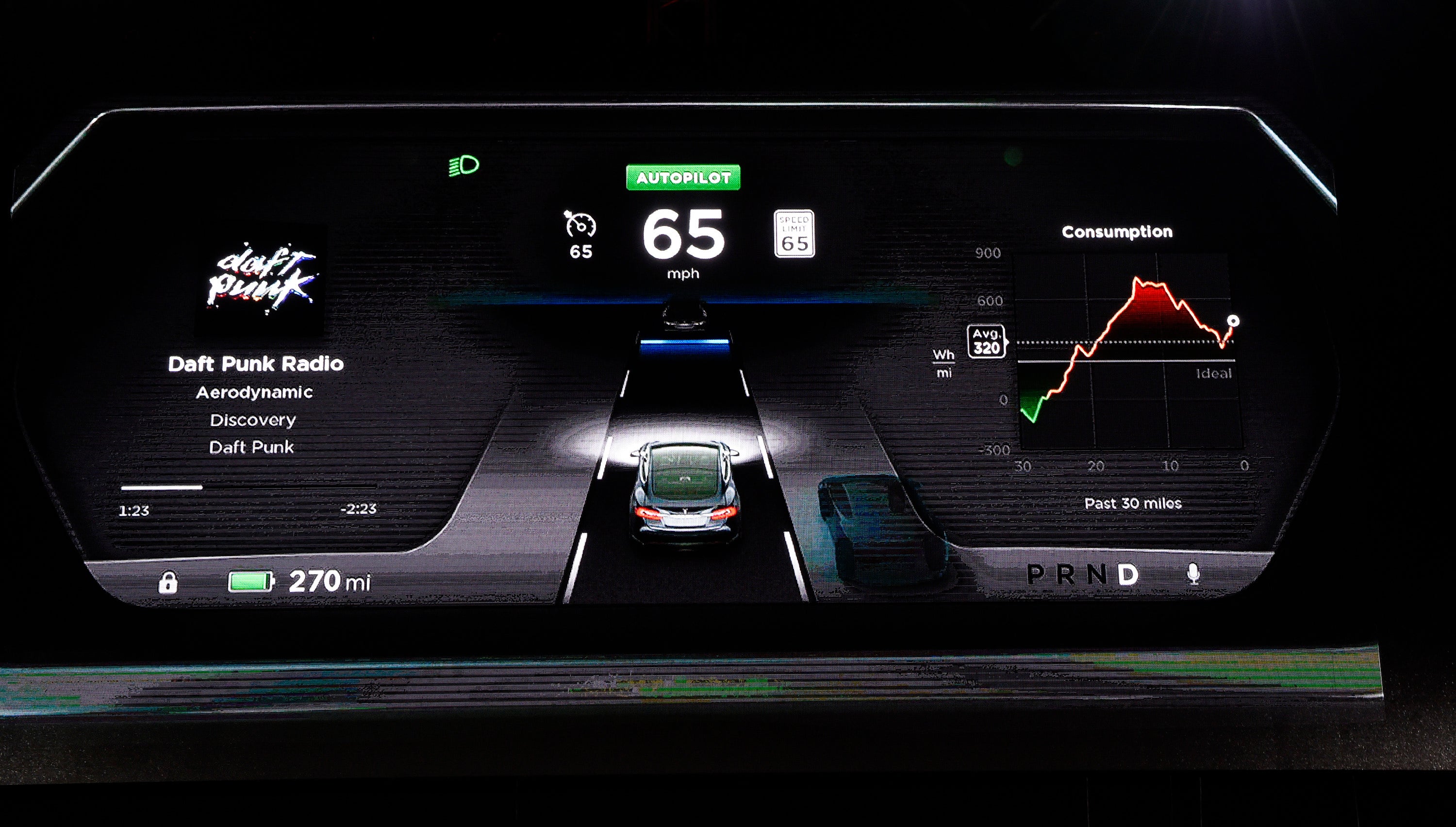

Lawyers for the plaintiffs in the Miami case argue that Tesla’s driver-assistance feature, called Autopilot, should have warned the driver and braked when his Model S sedan blew through flashing lights, a stop sign and a T-intersection at 62 miles an hour in the April 2019 crash.

Tesla said that drivers are warned not to rely on Autopilot, or its more advanced Full Self-Driving system. It says the fault lies entirely with the “distracted driver” just like so many other “accidents since cellphones were invented.”

McGee settled a separate suit brought by the family of Benavides and her severely injured boyfriend, Dillon Angulo.

Shown dashcam video Monday of his car jumping the road a split second before killing Benavides, McGee was clearly shaken. Asked if he had seen those images before, McGee pinched his lips, shook his head, then squeaked out a response: “No.”

Tesla’s attorney sought to show that McGee was fully to blame, asking if he had ever contacted Tesla for additional instructions about how Autopilot or any other safety features worked. McGee said he had not, though he was a heavy user of the features.

He said he had driven the same road home from work 30 or 40 times. Under questioning he also acknowledged he alone was responsible for watching the road and hitting the brakes.

But lawyers for the Benavides family had another chance to parry that line of argument and asked McGee if he would have taken his eyes off the road and reached for his phone had he been driving any car other than a Tesla on Autopilot.

McGee responded, “I don’t believe so.”

The case is expected to continue for two more weeks.

In the California case, the state’s Department of Motor Vehicles is arguing before an administrative judge that Tesla has misled drivers by exaggerating the capabilities of its Autopilot and Full Self-Driving features. A court filing claims even those feature names are misleading because they offer just partial self-driving.

Musk has been warned by federal regulators to stop making public comments suggesting Full Self-Driving allows his cars to drive themselves because it could lead to overreliance on the system, resulting in possible crashes and deaths.

He also has run into trouble with regulators over Autopilot. In 2023, the company had to recall 2.3 million vehicles for problems with the technology, and it is now under investigation for saying it fixed the issue even though it is unclear whether it has, according to regulatory documents.

The California case is expected to last another four days.