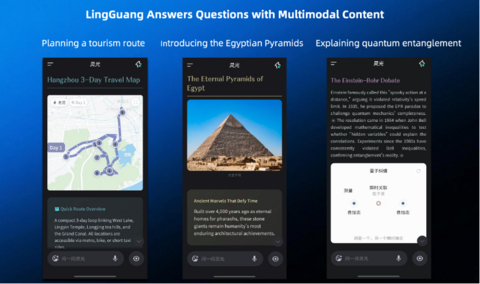

Ant Group Unveils China’s First Multimodal AI Assistant with Code-Driven Outputs

HANGZHOU, China, November 18, 2025–(BUSINESS WIRE)–Ant Group today launched LingGuang, a next-generation multimodal AI assistant and the first of its kind in China that interacts with users through code-driven outputs. Equipped with the capability to understand and produce language, image, voice and data, LingGuang delivers precise, structured responses to complex queries through 3D models, audio…